| Title | Metacomputing Project |

| Participants | Attila Caglar, Michael Griebel, Frank Koster, Guido Spahn, Gerhard Zumbusch |

| Key words | Metacomputing, Parallel Computing, Message Passing Interface (MPI), Globus Toolkit, Gigabit/s High Speed Networks, Computational Grid |

| Description |

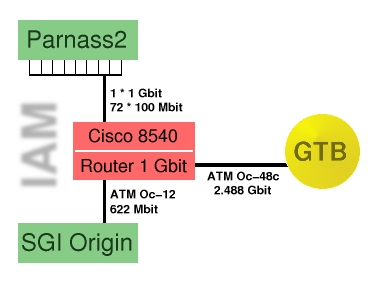

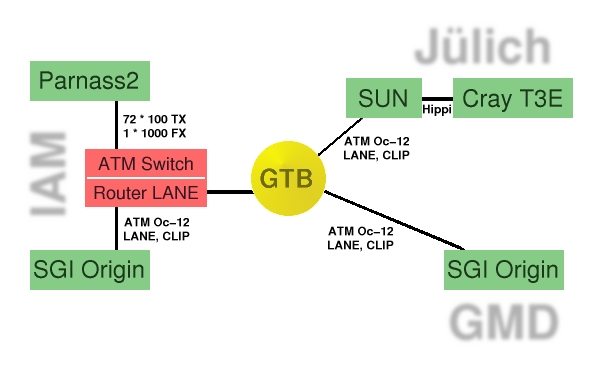

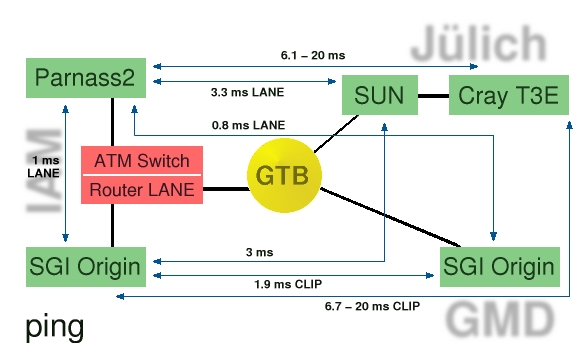

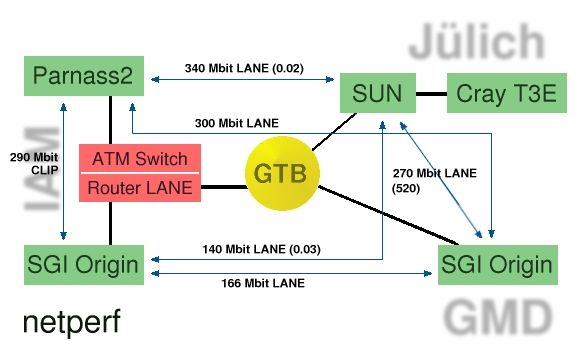

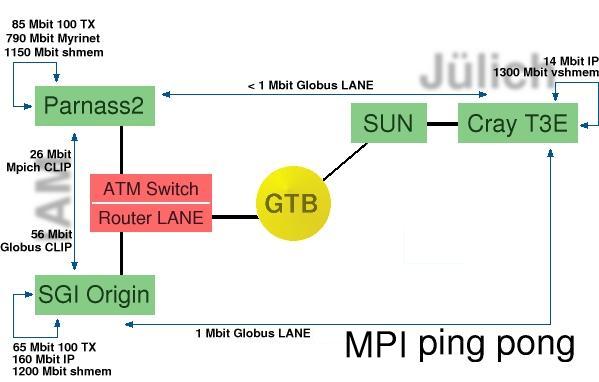

The aim of the project was to run parallel jobs distributed over a heterogeneous collection of computational resources communicating over a heterogeneous network. The main tool was MPICH-G, an implementation of the MPI standard layered on top of the Globus Metacomputing Toolkit, which provides functionality for authentication, job startup, job control and, especially, multimethod communication in heterogeneous computational grids.

At the Institute of Applied Mathematics (IAM) we used two different platforms: Parnass2, a cluster of Intel Pentium II based PCs running Linux 2.2, and an SGI Origin. Figure 1 shows the local network and the connection to the Gigabit Testbed (GTB). We extended the Globus startup mechanism to use the local scheduler on Parnass2 by replacing Globus' standard job control scripts with adapted scripts that perform the additional work.

To run metacomputing tests on a geographically distributed computational grid, we additionally used a Cray T3E at the Central Institute for Applied Mathematics, Forschungszentrum Jülich (Jülich), which is connected to the Gigabit Testbed over a gateway (SUN). The complete computational grid is shown in the corresponding figure.

Network Performance Tests

Before running applications based on MPICH-G and heterogeneous MPICH, we performed several simple performance tests to examine latency and bandwidth, and observed very poor results for connections between the Cray T3E and the other computing nodes. The results from the standard ping and netperf tools are shown in the corresponding figure.
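Latency and bandwidth are often summarized with the linear (Hockney) model t(n) = alpha + n / beta, where t(n) is the one-way time for an n-byte message, alpha is the latency and beta the asymptotic bandwidth. The sketch below, which assumes this model (the project description does not say how its figures were evaluated), recovers alpha and beta from measured (size, time) pairs by an ordinary least-squares line fit. The function names are hypothetical.

```python
from typing import Sequence, Tuple


def fit_latency_bandwidth(
    sizes: Sequence[float], times: Sequence[float]
) -> Tuple[float, float]:
    """Least-squares fit of t = alpha + n / beta.

    Assumes the linear (Hockney) model; returns (alpha, beta) with alpha in
    seconds and beta in bytes per second.
    """
    if len(sizes) != len(times) or len(sizes) < 2:
        raise ValueError("need at least two (size, time) pairs of equal length")
    k = len(sizes)
    mean_n = sum(sizes) / k
    mean_t = sum(times) / k
    sxx = sum((n - mean_n) ** 2 for n in sizes)
    if sxx == 0:
        raise ValueError("message sizes must not all be equal")
    sxy = sum((n - mean_n) * (t - mean_t) for n, t in zip(sizes, times))
    slope = sxy / sxx  # seconds per byte, i.e. 1 / beta
    if slope <= 0:
        raise ValueError("times do not grow with message size")
    alpha = mean_t - slope * mean_n  # intercept: time for an empty message
    return alpha, 1.0 / slope


def one_way_time(round_trip: float) -> float:
    """A ping-pong measures a round trip; the one-way time is half of it."""
    return round_trip / 2.0
```

Fitting a line rather than reading off the smallest and largest message times uses every measurement and damps the effect of a single noisy sample.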

The following ping-pong test sends messages of different sizes back and forth between two processes using standard MPI routines. We compare the heterogeneous version of MPICH (labeled "Mpich") with MPICH-G (labeled "Globus"). Figure 5 illustrates that Globus is a good choice for cluster computers (Figure 6, left) but a poor choice for the Cray T3E (right). This is due to the centralized I/O of the Cray T3E: only a few IP connections can be open simultaneously, and all of them are routed over a single I/O node. Conversely, implementations such as MetaMPI, which open a constant number of inter-site connections, are a good choice for the T3E but a poor one for our Linux cluster, because the IP performance of a single PC node is more restricted (see also Figure 7).
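The structure of such a ping-pong test can be illustrated with a small sketch. The project's test used MPI send and receive calls between processes on different machines; this hypothetical Python version instead bounces messages between two ends of a local `socket.socketpair()`, with a thread playing the "pong" side. It therefore measures only local software overhead, not network latency or bandwidth, but the timing logic (repeat a round trip, average, halve) is the same.

```python
import socket
import threading
import time
from typing import Dict, List


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; a stream socket may deliver partial chunks."""
    chunks = []
    remaining = n
    while remaining > 0:
        chunk = sock.recv(min(remaining, 65536))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def _echo(sock: socket.socket, sizes: List[int], reps: int) -> None:
    """The 'pong' side: bounce every message straight back to the sender."""
    for n in sizes:
        for _ in range(reps):
            sock.sendall(_recv_exact(sock, n))


def ping_pong(sizes: List[int], reps: int = 10) -> Dict[int, float]:
    """Return the mean one-way time (seconds) for each message size."""
    if reps < 1 or any(n < 1 for n in sizes):
        raise ValueError("sizes and reps must be positive")
    ping, pong = socket.socketpair()
    echo = threading.Thread(target=_echo, args=(pong, sizes, reps), daemon=True)
    echo.start()
    results: Dict[int, float] = {}
    try:
        for n in sizes:
            payload = b"x" * n
            start = time.perf_counter()
            for _ in range(reps):
                ping.sendall(payload)  # send the message ...
                _recv_exact(ping, n)   # ... and wait until it comes back
            elapsed = time.perf_counter() - start
            # Each repetition is one round trip; halve it for one-way time.
            results[n] = elapsed / reps / 2.0
    finally:
        ping.close()  # unblocks the echo thread if we bailed out early
        echo.join()
        pong.close()
    return results
```

For example, `ping_pong([1, 1024, 65536], reps=100)` returns a dictionary mapping each message size to its mean one-way time; small sizes are dominated by per-message overhead (latency), large ones by transfer rate (bandwidth).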

Tests in Practice

The aim of the project was to run parallel applications on the heterogeneous computational grid. Here are the results from a simulation of nanotubes. The histograms show the results for several combinations of Parnass2 and Origin processes; unless otherwise stated, the number of Parnass2 processes increases from right to left.

Finally, and more briefly, the execution times of "KRUSTENFLUIDE".


| Bibliography |

Awarded Second Prize at the SuParCup '99 Competition, Supercomputer '99, Mannheim.


| Related Projects |


| In cooperation with | Central Institute for Applied Mathematics, KFA Juelich |